What Are AI Agentic Workflows?

AI Agentic Workflows are business processes that use Large Language Models (LLMs) as intelligent agents that:

- Plan, decide, and execute complex multistep tasks

- Collaborate with tools (APIs, databases, internet search)

- Adapt dynamically to new data, feedback, and business rules

AI Agentic Workflows transform AI from being a passive assistant into an active operator — analyzing data, executing logic, sending reports, and integrating across systems automatically.

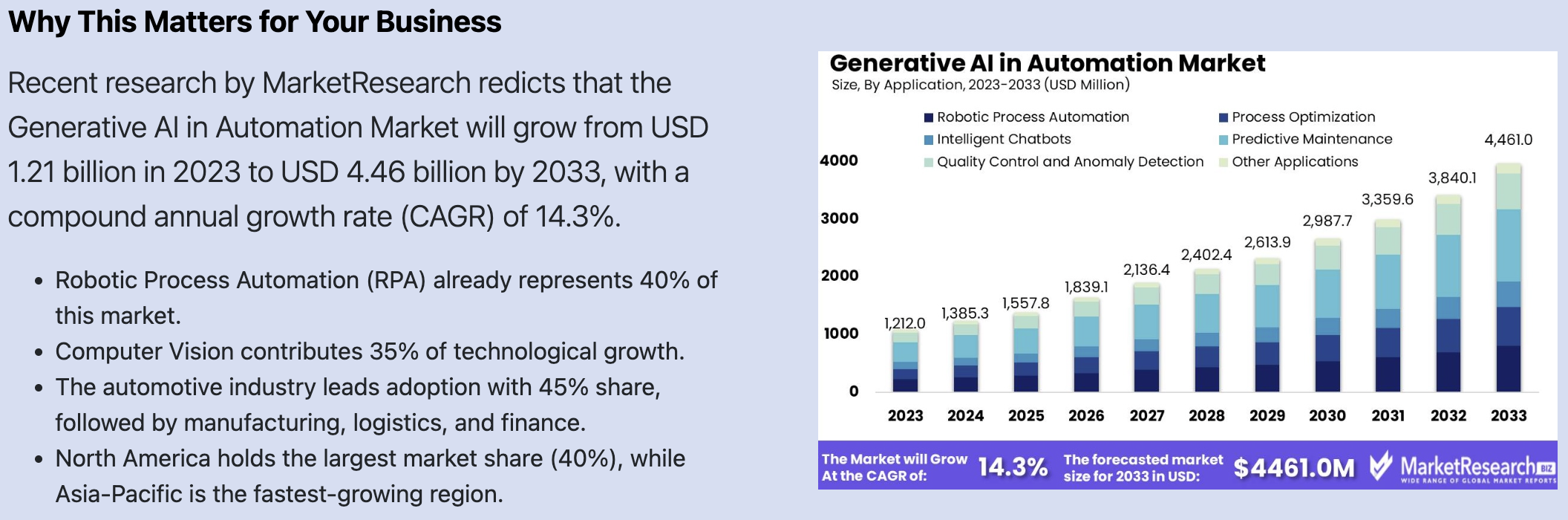

These numbers signal that automation enhanced by AI is not a future vision — it’s a present competitive necessity.

Business Impact of Implementing LLM Agentic Workflows

- Productivity: Automate repetitive workflows (reports, analytics, document processing)

- Efficiency: Reduce human bottlenecks — agents coordinate tools, data, and APIs autonomously

- Scalability: Scale operations without scaling headcount

- Insight Generation: Combine RAG + LLMs to analyze, predict, and explain business data in real time

- Innovation: Free teams to focus on strategy and creativity instead of manual execution

Implementing LLM Agentic Workflows transforms how a business operates — shifting from manual, human-dependent processes to autonomous, insight-driven systems.

The Strategic Advantage

Implementing LLM-driven Agentic Workflows positions your business to:

- Operate continuously with autonomous intelligence

- React instantly to changing data and market conditions

- Deliver faster, more accurate decisions

- Build a competitive moat through proprietary automation and knowledge integration

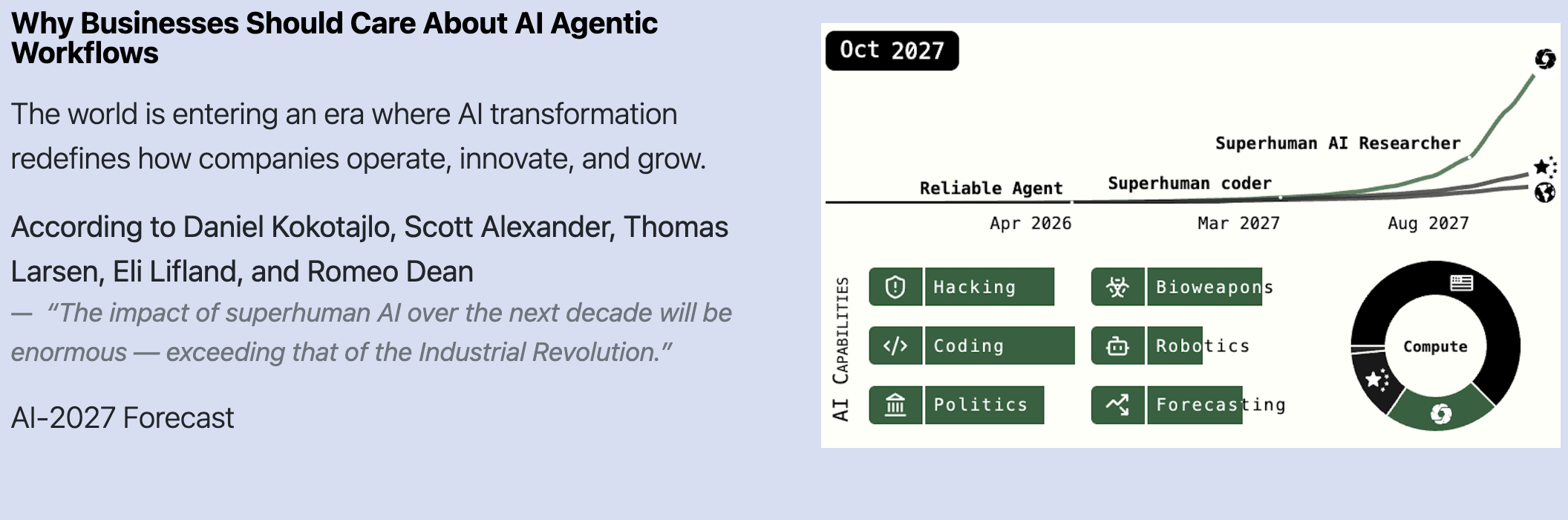

Just as the Industrial Revolution transformed manual labor into mechanical efficiency, Agentic AI is now transforming digital labor into cognitive automation — the next leap in business evolution.

Businesses that integrate AI Agentic Workflows today are not merely adopting a technology — they are preparing for a new economic paradigm. The convergence of LLMs, RAG, and automation is redefining productivity, decision-making, and growth across industries.

Our Solutions

Chat Assistant for Website

Transform a static website into an interactive, intelligent communication channel powered by an LLM and enhanced with Retrieval-Augmented Generation.


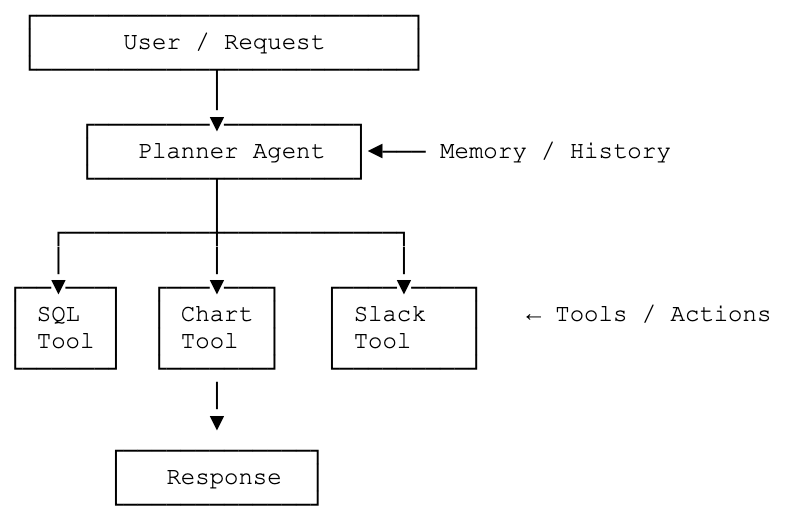

Data Analytics Agent

Build an intelligent system that lets teams query, analyze, and interpret large datasets using natural language, without needing direct SQL or dashboard expertise.


RAG Agents

Combine reasoning and generation capabilities of LLMs with the precision and grounding of external knowledge retrieval — from databases, document stores, APIs, or structured data sources.


Agentic Workflows

Build multistep, autonomous processes powered by LLMs with reasoning, memory, and tool-using capabilities, allowing agents to collaborate or chain tasks together to achieve complex goals.

Online Chat Assistant for Website

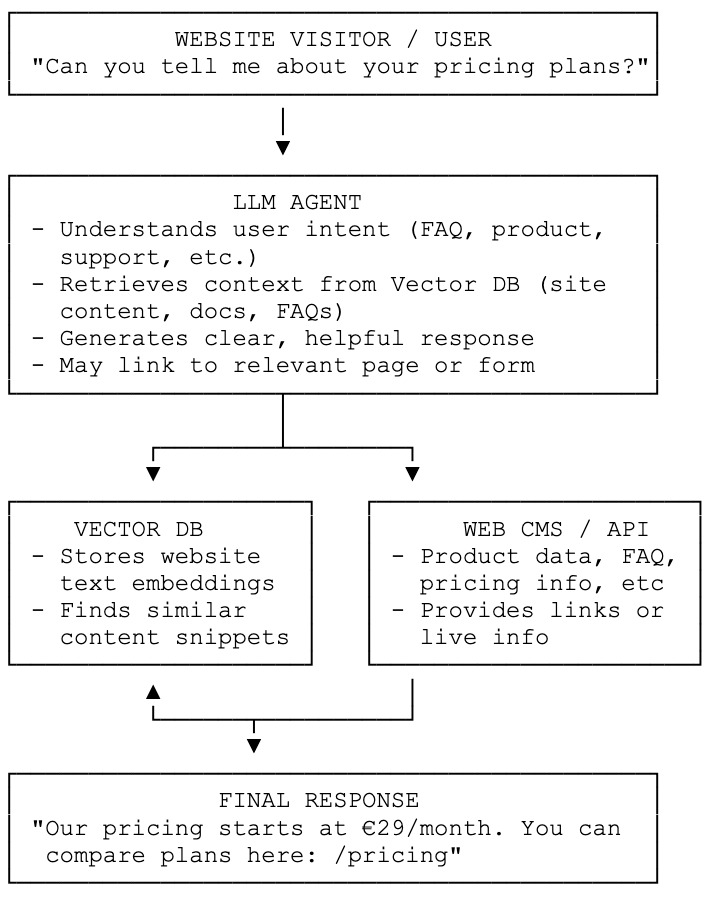

An Online Chat Assistant transforms a static website into an interactive, intelligent communication channel powered by a Large Language Model (LLM) and optionally enhanced with Retrieval-Augmented Generation (RAG).

Instead of visitors passively browsing pages, the assistant engages users conversationally, answers their questions instantly, and guides them toward actions that matter — reading key content, filling forms, scheduling demos, or making purchases.

On a traditional website:

- Users must manually navigate menus and read long pages

- Information can be hard to find, outdated, or confusing

- Conversion opportunities (sign-ups, sales, support resolutions) are often lost

An AI chat assistant, by contrast:

- Answers questions instantly

- Understands user intent (not just keywords)

- Guides users directly to the right product, service, or document

- Works 24/7 and scales with your traffic

- Adapts to tone and context, improving user satisfaction

It also helps your business:

- Improve SEO and engagement by keeping users on-site longer

- Build trust and professionalism through accurate, conversational guidance

| Component | Function |
|---|---|
| LLM Agent | Chat interface with intent recognition, context retrieval, and response generation |
| Vector DB | Holds embeddings of website pages, FAQs, manuals for semantic retrieval |
| CMS / API | Provides structured data such as pricing, contact info, or product details |
| File Store (optional) | Hosts downloadable brochures, PDFs, or guides linked in chat |
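To make the Vector DB row more concrete, here is a minimal sketch of the retrieval step in Python. It assumes a toy term-count vector in place of a real embedding model and an in-memory dictionary in place of the vector database; the page texts and the names `embed`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical website chunks; a real deployment would index your pages, FAQs, and manuals.
PAGES = {
    "pricing": "Our plans start with a free tier. Paid plans include priority support.",
    "contact": "Contact our team by email or book a demo through the contact form.",
    "returns": "Products can be returned within 30 days for a full refund.",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Stand-in for the Vector DB: one precomputed vector per page.
INDEX = {page_id: embed(text) for page_id, text in PAGES.items()}


def retrieve(question: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k pages most similar to the question, best match first."""
    q = embed(question)
    scored = [(page_id, cosine(q, vec)) for page_id, vec in INDEX.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


def build_prompt(question: str) -> str:
    """Put the retrieved page text into a grounded prompt (the LLM call itself is omitted)."""
    context = "\n".join(f"[{pid}] {PAGES[pid]}" for pid, score in retrieve(question) if score > 0)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(retrieve("How do I book a demo?")[0][0])  # contact
```

Swapping `embed` for a real embedding model and `INDEX` for a vector database keeps the same shape: embed the question, rank stored chunks by similarity, and pass the best matches to the LLM as context.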

An AI Chat Assistant with LLM + RAG turns your website from a static brochure into a living, conversational experience. It understands your visitors, retrieves the right information from your data, and communicates it naturally — improving user experience, trust, and business results.

AI Data Analytics Agent

An AI Data Analytics Agent is an internal, intelligent system that allows teams to query, analyze, and interpret large datasets using natural language — without needing direct SQL or dashboard expertise.

It combines a Large Language Model (LLM) with data access tools (SQL, Elasticsearch, APIs) and analytical modules for data exploration, prediction, and reporting.

Example questions:

- “Show me sales growth by region over the last three quarters.”

- “Which marketing channels brought the highest ROI this quarter?”

- “Generate a performance summary for product category X.”

- “Show correlation between ad spend and conversion rate.”

The problem:

- Modern organizations collect massive amounts of data

- Most employees can’t write SQL or navigate complex BI dashboards

- Analysts spend hours preparing repetitive reports

- Data insights are siloed, delayed, and often overlooked

The agent addresses this by:

- Turning data into natural-language answers, not raw tables

- Saving time and analyst effort

- Making insights accessible across the organization

- Improving data-driven decision-making speed

| | Component | Function |
|---|---|---|
| 🧠 | LLM Agent | Understands natural language queries, plans multistep analysis, explains results |
| ⛁ | SQL / Elasticsearch Tools / API | Fetch structured or unstructured data efficiently from internal data sources |
| 🛠️ | Analytics Engine | Performs calculations, aggregations, statistical modeling, and trend detection |
| 📊 | Visualization Layer | Builds charts, tables, and dashboards for clear understanding |
| 📝 | Memory / Context Store | Keeps session context for multi-turn analytical conversations |
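A minimal sketch of how the LLM Agent, SQL tool, and Memory / Context Store rows might fit together, assuming an in-memory SQLite table with made-up sales figures. The LLM step is replaced by a hypothetical `llm_to_sql` function that recognizes only one question pattern; `run_sql`, `ask`, and `session_memory` are also illustrative names.

```python
import sqlite3

# Hypothetical sample data; a real agent would connect to your own database or warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("EU", "Q1", 100.0), ("EU", "Q2", 120.0), ("EU", "Q3", 150.0),
        ("US", "Q1", 200.0), ("US", "Q2", 210.0), ("US", "Q3", 190.0),
    ],
)


def llm_to_sql(question: str) -> str:
    """Stand-in for the LLM planning step: hard-coded for one question pattern."""
    if "growth by region" in question.lower():
        return (
            "SELECT region, "
            "SUM(CASE WHEN quarter = 'Q3' THEN amount END) - "
            "SUM(CASE WHEN quarter = 'Q1' THEN amount END) AS growth "
            "FROM sales GROUP BY region ORDER BY region"
        )
    raise ValueError("question not supported by this sketch")


def run_sql(sql: str) -> list[tuple]:
    """SQL tool with a naive guard: only statements starting with SELECT are executed."""
    if not sql.lstrip().upper().startswith("SELECT"):
        raise PermissionError("only SELECT queries are allowed")
    return conn.execute(sql).fetchall()


# Memory / Context Store: keeps each turn so follow-up questions can refer back to it.
session_memory: list[dict] = []


def ask(question: str) -> str:
    sql = llm_to_sql(question)
    rows = run_sql(sql)
    session_memory.append({"question": question, "sql": sql, "rows": rows})
    # A real agent would hand the rows back to the LLM to explain them in prose.
    return "; ".join(f"{region}: {growth:+.0f}" for region, growth in rows)


print(ask("Show me sales growth by region over the last three quarters."))  # EU: +50; US: -10
```

Restricting the tool to read-only queries is one simple safeguard; a read-only database user is a stronger guarantee than a string check.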

An AI Data Analytics Agent transforms internal data access into a conversational experience. It acts as an intelligent data analyst: understanding questions, retrieving relevant data, performing analysis, and delivering accurate, visual reports instantly. Powered by LLM reasoning and data tools like SQL and Elasticsearch, it turns corporate data into actionable knowledge for every team.

RAG Agents

Retrieval-Augmented Generation (RAG) Agent Systems combine the reasoning and generation capabilities of large language models (LLMs) with the precision and grounding of external knowledge retrieval — from vector databases, document stores, APIs, or structured data sources.

They extend traditional LLMs by giving them access to facts, documents, and context beyond their training data, allowing accurate, up-to-date, and verifiable answers.

A RAG agent can:

- Retrieve relevant information from external sources

- Incorporate that context into reasoning or generation

- Produce grounded, fact-aware, and source-referenced responses

Our RAG solution:

- Operates entirely within your private network, so data stays on your infrastructure, supporting data privacy and regulatory compliance

- Can be deployed locally on enterprise servers or modern laptops (MacBook Pro M4 or equivalent) for complete flexibility

- Is optimized for fast, context-aware retrieval, giving instant access to accurate, cited information from your internal knowledge base

| | Component | Function |
|---|---|---|
| 🧠 | Reasoner Agent | Understands user query and decides what to retrieve |
| 🛠 | Retriever | Connects to vector store, search API, or database to fetch relevant documents or facts: Qdrant, Elasticsearch, SQL, Web Search |
| ⛁ | Memory | Stores prior queries, embeddings, user context, and retrieval cache: Redis, Postgres, vector DB |

RAG Agent Systems turn LLMs into fact-aware, retrieval-empowered agents. They plan queries, fetch knowledge, build context, generate grounded answers, and self-verify their completeness — forming a closed-loop intelligence cycle where reasoning and retrieval continually reinforce each other.
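The plan, retrieve, and verify cycle described above can be sketched as follows. This is an illustration under simplifying assumptions: the Reasoner is replaced by splitting the question on " and ", the Retriever by keyword overlap instead of Qdrant or Elasticsearch, and the knowledge base entries and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


# Hypothetical internal knowledge base.
KB = [
    Doc("hr-01", "Employees receive 25 vacation days per year."),
    Doc("it-07", "VPN access requires multi-factor authentication."),
]


def plan_queries(question: str) -> list[str]:
    """Reasoner stand-in: split a compound question into sub-queries (an LLM would do this)."""
    return [part.strip(" ?") for part in question.split(" and ")]


def retrieve(query: str) -> list[Doc]:
    """Retriever stand-in: naive keyword overlap instead of a vector or search index."""
    terms = {w.lower() for w in query.split() if len(w) > 3}
    return [d for d in KB if terms & {w.lower().strip(".") for w in d.text.split()}]


def answer(question: str) -> dict:
    """Plan sub-queries, retrieve for each, then self-verify that every part is grounded."""
    context: dict[str, list[Doc]] = {sub: retrieve(sub) for sub in plan_queries(question)}
    # Self-verification: every sub-query must be backed by at least one source.
    missing = [sub for sub, docs in context.items() if not docs]
    cited = sorted({d.doc_id for docs in context.values() for d in docs})
    # A real system would now prompt the LLM with the retrieved text and these citations.
    return {"sources": cited, "missing": missing, "complete": not missing}


print(answer("How many vacation days do I get and how does VPN access work?"))
```

If `complete` is `False`, an agent could rephrase the missing sub-queries and retrieve again, or tell the user which part of the question it could not ground in a source.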

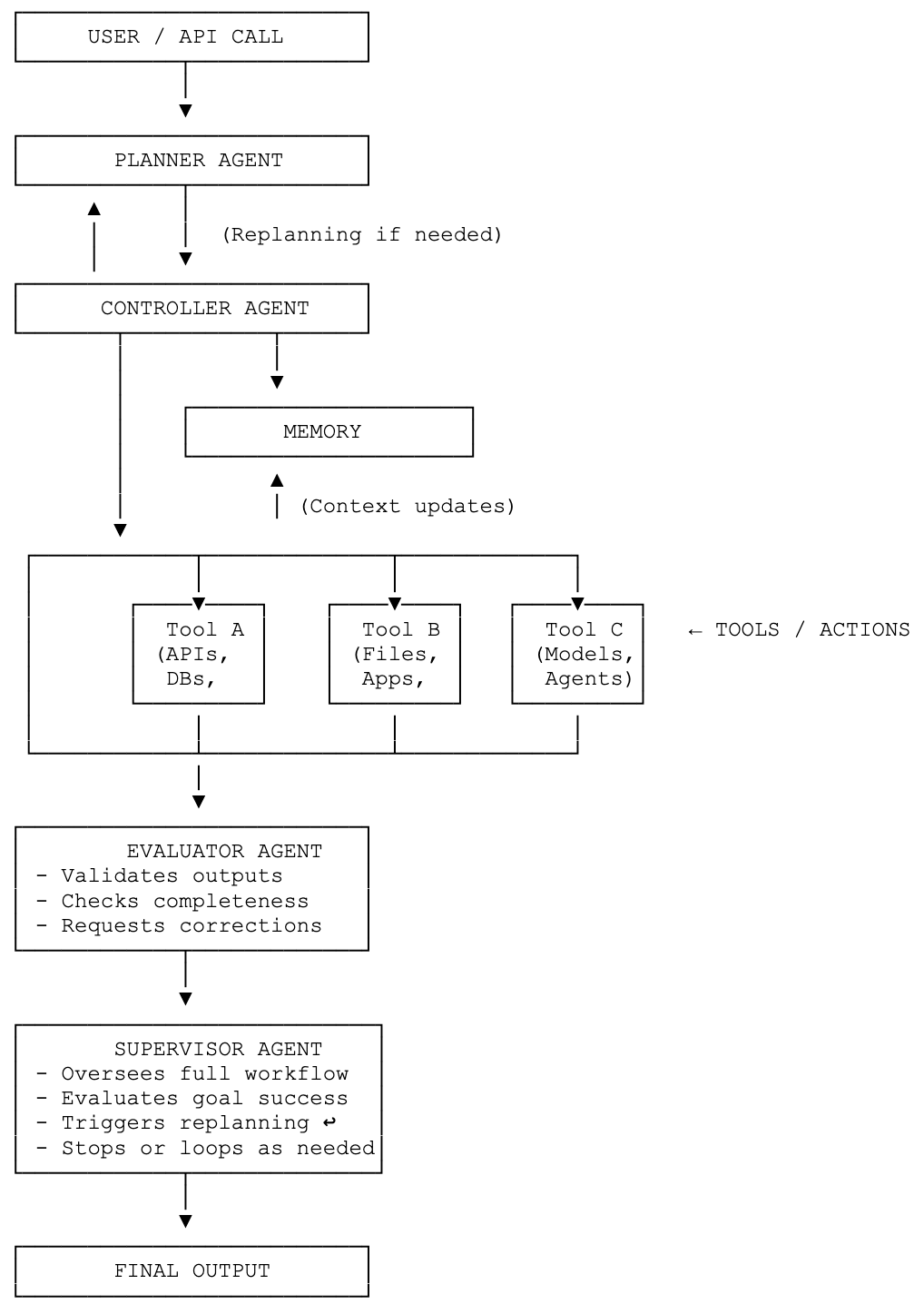

AI Agentic Workflows

AI Agentic Workflows are multi-step, autonomous processes powered by large language models (LLMs) with reasoning, memory, and tool-using capabilities. They allow agents to collaborate or chain tasks together to achieve complex goals.

Agentic AI systems act like autonomous employees — they plan, decide, and execute multistep tasks using tools and memory. Instead of simply generating text, they focus on getting things done.

Unlike the traditional “one prompt → one response” pattern, agentic workflows are goal-driven and interactive with their environment — such as APIs, databases, users, or external applications.

At each step, the agent decides:

- What to do next

- Which tool to use

- How to interpret results

- When to ask for permission or human feedback

- When to stop
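The decisions listed above map naturally onto a control loop. The sketch below assumes a hypothetical rule-based `decide` function in place of the LLM, two dummy tools, and a caller-supplied `approve` callback standing in for human sign-off; none of these names come from a specific framework.

```python
from typing import Callable


# Hypothetical tools returning dummy data.
def query_sales(request: str) -> str:
    return "Q3 sales report (dummy data)"


def send_email(body: str) -> str:
    return f"email sent: {body}"


TOOLS: dict[str, Callable[[str], str]] = {"query_sales": query_sales, "send_email": send_email}
NEEDS_APPROVAL = {"send_email"}  # tools with external side effects need a human OK


def decide(goal: str, history: list[tuple[str, str]]) -> tuple[str, str]:
    """Rule-based stand-in for the LLM policy; returns (action, argument)."""
    done = {tool for tool, _ in history}
    if "query_sales" not in done:
        return "query_sales", goal
    if "send_email" not in done:
        return "send_email", history[-1][1]  # forward the previous tool's result
    return "finish", history[-1][1]


def run_agent(goal: str, approve: Callable[[str, str], bool], max_steps: int = 5) -> list[tuple[str, str]]:
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):  # hard step limit so the loop always terminates
        action, arg = decide(goal, history)  # what to do next / which tool to use
        if action == "finish":  # when to stop
            break
        if action in NEEDS_APPROVAL and not approve(action, arg):  # ask for permission
            history.append((action, "rejected by human"))
            break
        result = TOOLS[action](arg)
        history.append((action, result))  # the result informs the next decision
    return history
```

For example, `run_agent("Summarize Q3 sales", approve=lambda action, arg: True)` queries sales and then sends the email, while an `approve` callback that returns `False` stops the run before anything is sent.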

| | Component | Function |
|---|---|---|
| 🛠 | Tools | Interfaces the agent can call to perform real actions: SQL queries, web search, send emails, generate PDFs/CSVs, draw charts, etc. |
| ⛁ | Memory | Storage of past context, states, and results: preserves conversation history, vector memory, and workflow state. |
| 🌐 | Environment | The world the agent interacts with: Databases, APIs, files, emails, chat, sensors, etc. |
| 🧠 | Planner | LLM module that thinks ahead, decomposing a goal into steps or subtasks. |
| 🧠 | Controller | LLM or orchestration module that manages execution flow: decides what to run next, handles state, errors, and transitions. |
| 🧠 | Supervisor | LLM module which oversees correctness: monitors multiple agents or steps, evaluates progress, and ensures the goal is achieved. |
| 🧠 | Evaluator | Program or LLM module which verifies output quality and completeness: checks if each result satisfies the user’s intent. |
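A minimal sketch of how the Planner, Controller, Evaluator, and Supervisor roles could divide the work, assuming a fixed plan, dummy worker functions, and a non-empty-output check as the Evaluator. All names are hypothetical; in a real system the Planner and Supervisor would typically be LLM calls.

```python
from typing import Callable


def planner(goal: str) -> list[str]:
    """Planner stand-in: a fixed decomposition that an LLM would normally derive from the goal."""
    return ["fetch_data", "summarize", "export_csv"]


# Hypothetical workers; 'summarize' returns nothing on its first attempt to trigger a retry.
WORKERS: dict[str, Callable[[int], str]] = {
    "fetch_data": lambda attempt: "rows=120 (dummy)",
    "summarize": lambda attempt: "" if attempt == 0 else "summary of 120 rows",
    "export_csv": lambda attempt: "report.csv",
}


def evaluator(step: str, output: str) -> bool:
    """Evaluator as a plain program check: accept any non-empty output."""
    return bool(output.strip())


def controller(goal: str, max_retries: int = 2) -> dict[str, str]:
    """Controller: runs each planned step, retrying until the Evaluator accepts it."""
    results: dict[str, str] = {}
    for step in planner(goal):
        for attempt in range(max_retries + 1):
            output = WORKERS[step](attempt)
            if evaluator(step, output):
                results[step] = output
                break
        else:  # no attempt passed the Evaluator
            raise RuntimeError(f"step {step!r} failed after {max_retries + 1} attempts")
    return results


def supervisor(goal: str, results: dict[str, str]) -> bool:
    """Supervisor: the goal counts as achieved only if every planned step has an accepted result."""
    return all(step in results for step in planner(goal))


results = controller("Weekly sales report")
print(results["summarize"], supervisor("Weekly sales report", results))  # summary of 120 rows True
```

Keeping the Evaluator as a plain program check, which the table allows, makes failures cheap to detect and retries deterministic.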

AI Agentic Workflows transform LLMs from passive text generators into autonomous, goal-oriented systems capable of planning, acting, reasoning, and verifying — effectively functioning as intelligent collaborators rather than static assistants.


About us

We are a team of experienced software engineers with over a decade of hands-on expertise in building, scaling, and maintaining enterprise-grade Java applications.

Our background spans large-scale distributed systems, data-driven platforms, and automation-focused architectures — always delivered with precision, performance, and quality.

Over the years, we have successfully led and implemented numerous state-of-the-art solutions for global clients in industries such as e-commerce, analytics, and fintech.

Our projects have consistently earned positive feedback for their reliability, scalability, and long-term maintainability.

As developers, we value:

- Clean, testable, and automated code: every solution we build is crafted with quality assurance at its core

- Cutting-edge technology: from modern Java stacks and Spring Boot microservices to AI-driven workflows and cloud automation

- Transparency and partnership: we work closely with clients to transform complex challenges into elegant, maintainable solutions

After years of experience in enterprise development, we founded this startup with one clear goal — to bring our collective expertise, engineering discipline, and passion for innovation to a broader audience.

We believe in blending proven enterprise reliability with modern intelligent automation, helping businesses evolve faster, operate smarter, and build systems that last.

Our mission: deliver robust, intelligent, and future-ready software — built by professionals who’ve done it, proven it, and perfected it.

Don't hesitate to reach out to us

Dennen Software Services BV

BE 1016.190.806

2610 Antwerpen
